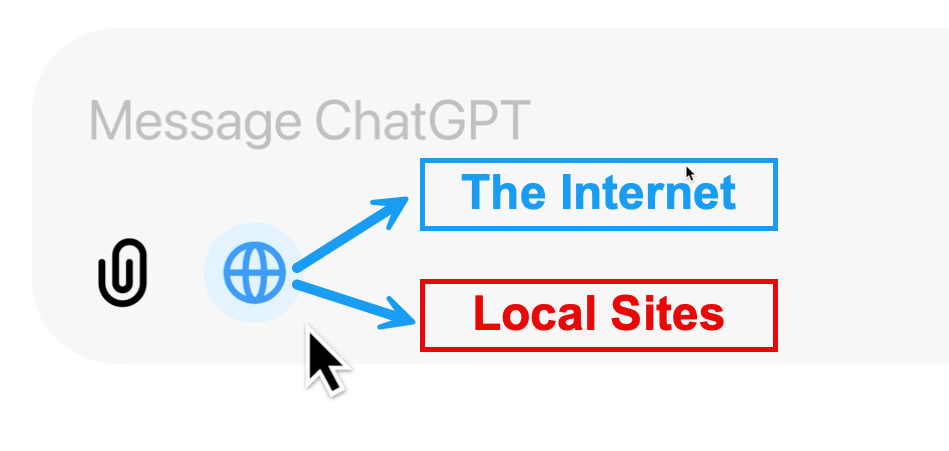

ChatGPT can use information from internal sites

The new ChatGPT Search feature, which is meant to “get fast, timely answers with links to relevant web sources” apparently can use information from internal sites, too. A first hint was given by S...

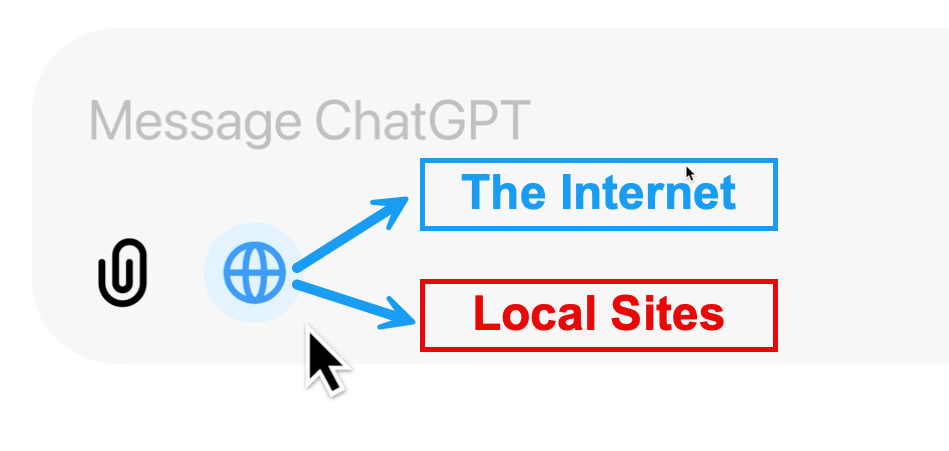

Yann LeCun has made an interesting statement in a talk he gave recently. I didn’t find the original source, but here is a snippet from Twitter: Predictions aside, I think the realization that AI...

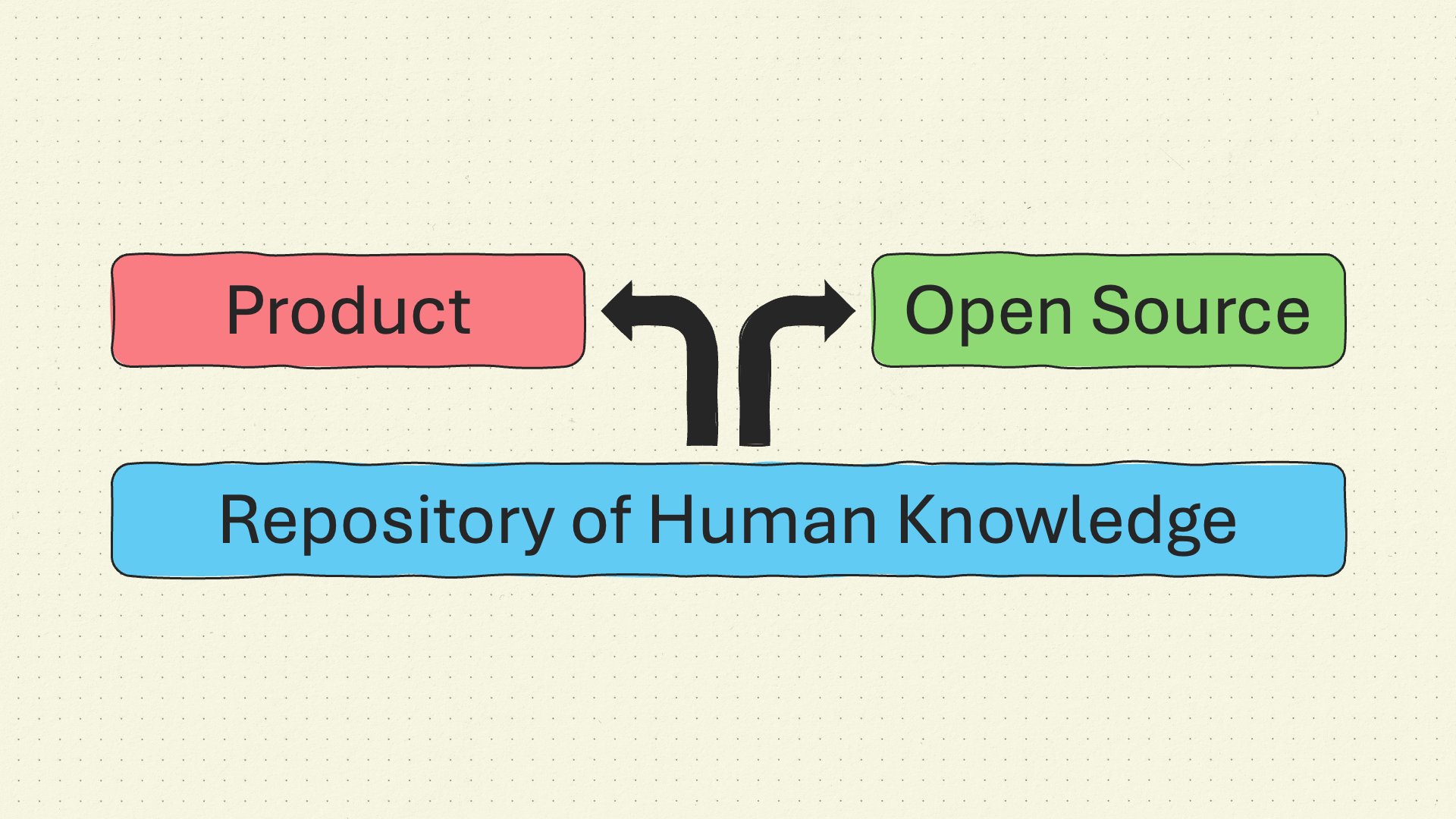

Here is a quick tip to make working with Dokku a bit easier. You can create an alias for the ssh command to save some typing when working with your Dokku server. Add the following line to your .b...

Today, I wanted to try a little experiment: In reaction to a tweet by Pieter Levels about preferring builders over talkers, I created and launched a dead simple website called Builder Habit....

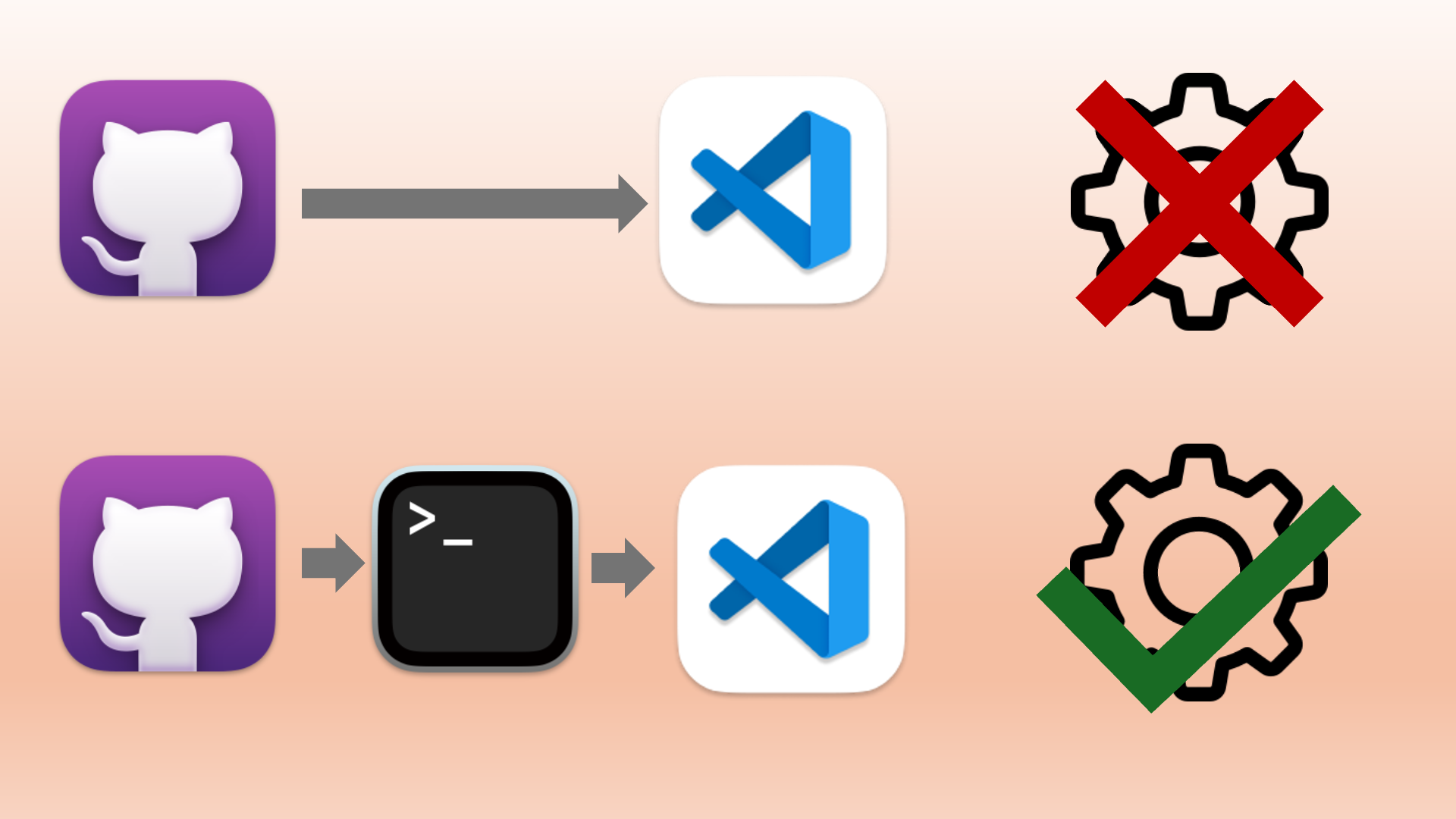

Quick tip: It’s much better to launch VS Code from the command line. Why? Because this way, VS Code picks up the session’s environment configuration, too, and you don’t need to configure the Pyth...

I am working on a SaaS app. Although I started it with FastAPI, I decided to go back to Django: I’ve been using Django on and off since the end of 2005 (really!), so I know it much better than FastAPI....

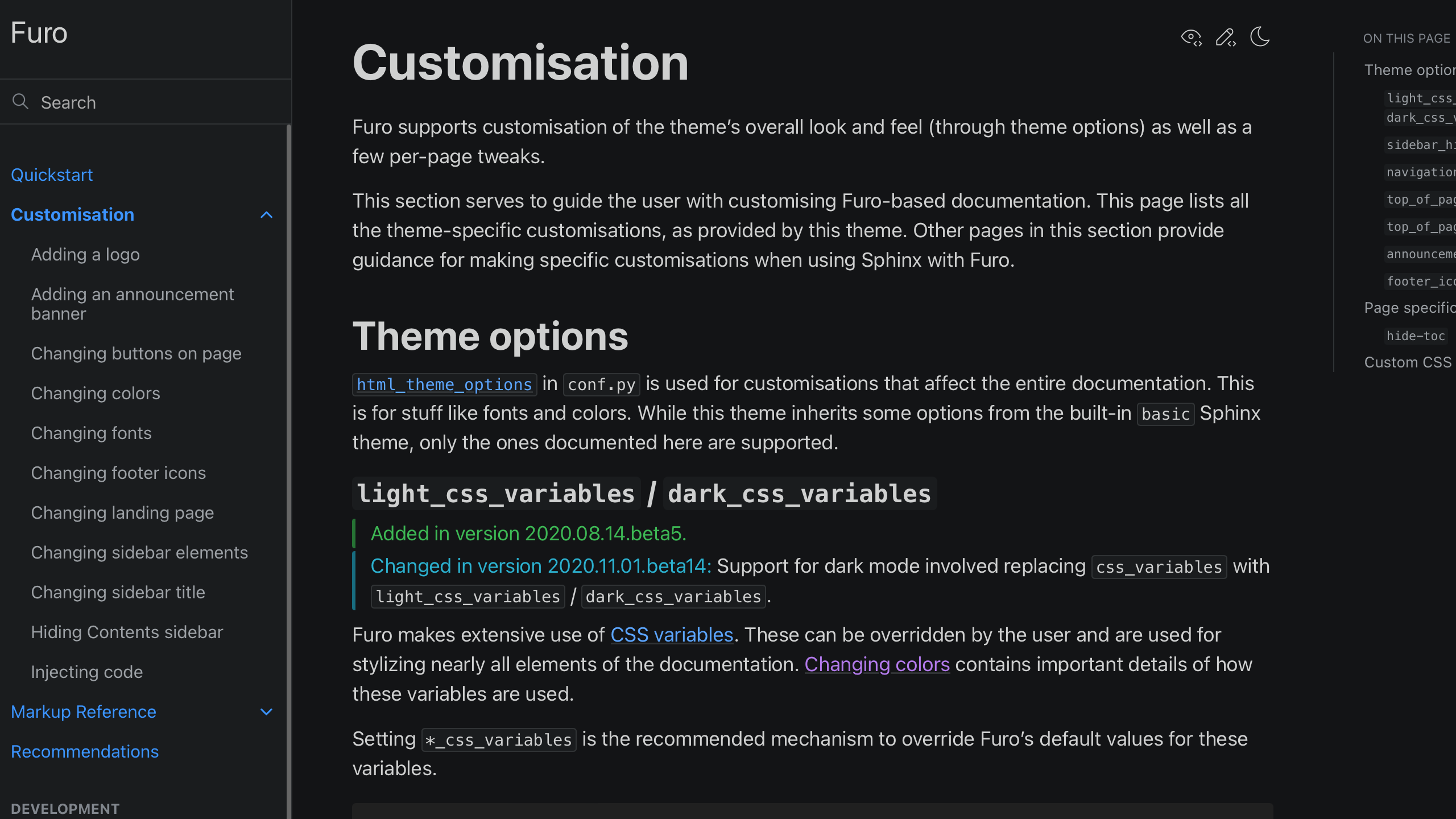

For documentation, I prefer Sphinx over static site builders like Hugo and Jekyll, as I can have one source for HTML, EPUB, and PDFs, create docs from Jupyter Notebooks with Jupyter Book, wr...

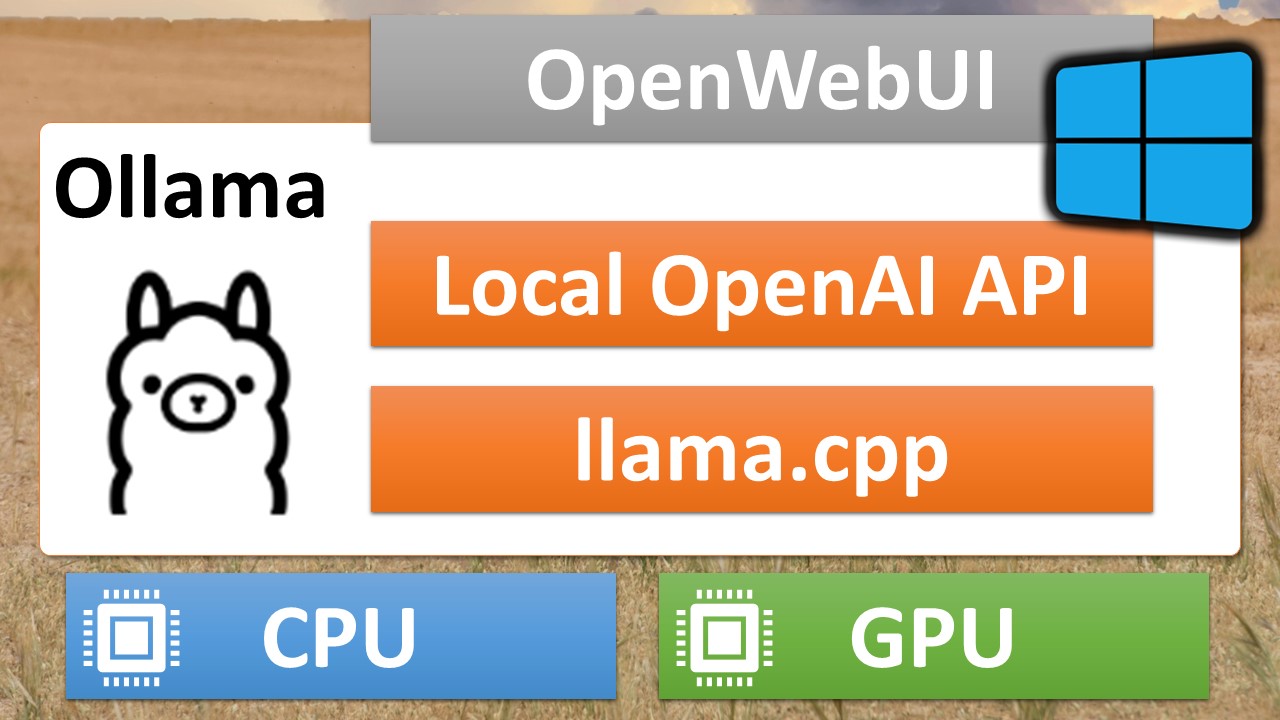

I wanted to load Ollama models onto a system with restricted internet access. Unfortunately, Ollama doesn’t yet support private registries or have a command for exporting models. As a workaround, ...

Ollama is one of the easiest ways to run large language models locally. Thanks to llama.cpp, it can run models on CPUs or GPUs, even older ones like my RTX 2070 Super. It provides a CLI and an Ope...
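
The excerpt stops before showing the API. As an illustration only, here is a minimal sketch that calls the Ollama server's native `/api/generate` REST endpoint using Python's standard library. It assumes the server is running locally on its default port 11434. The helper names `build_generate_request` and `generate` are hypothetical, and the model name used below is a placeholder for whatever model you have pulled.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send the request and return the generated text (requires a running server)."""
    request = build_generate_request(model, prompt, url)
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False the full answer arrives in a single "response" field.
    return body["response"]
```

Usage, assuming a model named `llama3` has been pulled with `ollama pull llama3`: `print(generate("llama3", "Why is the sky blue?"))`. Setting `"stream": False` keeps the sketch simple. By default the endpoint streams one JSON object per line instead.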

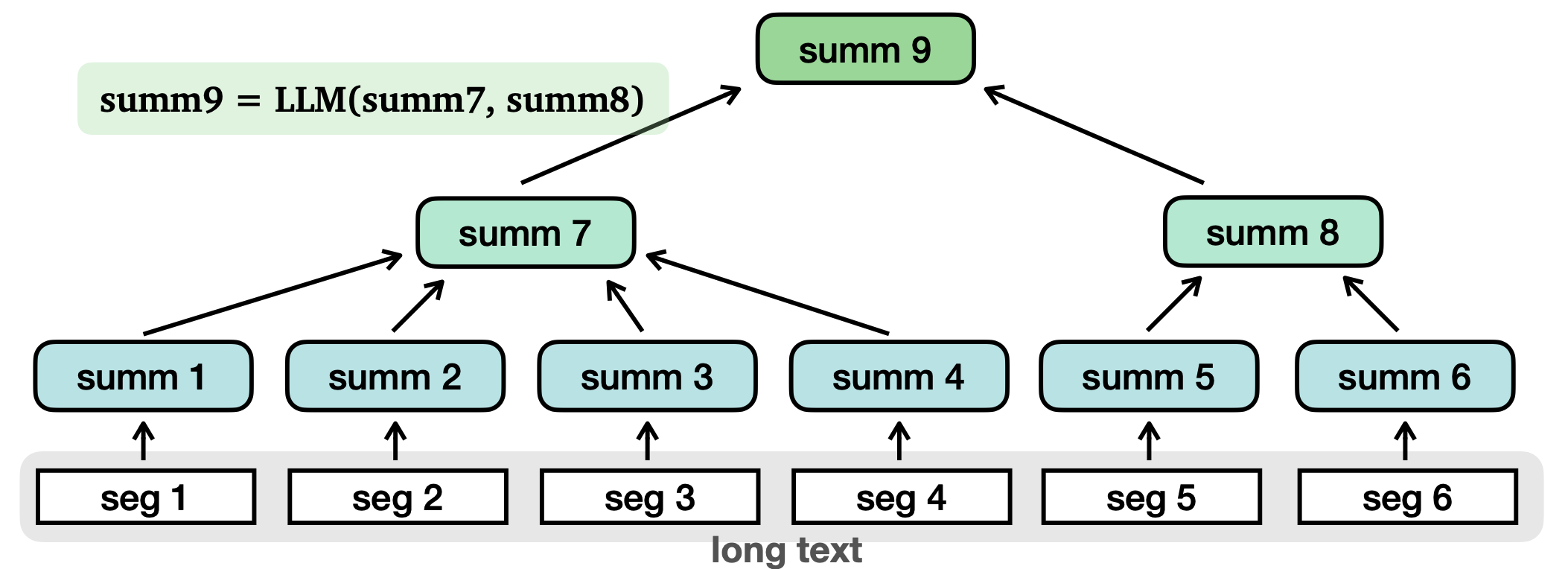

Large language models like GPT-3.5 and GPT-4 can summarize text quite well. But the text must fit into the context window. In this blog post and its accompanying video you will learn how to summariz...
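
The excerpt doesn't show the post's own method. One common pattern for texts that exceed the context window is map-reduce summarization: split the text into chunks, summarize each chunk, then summarize the joined summaries, repeating until everything fits into one request. The sketch below assumes word count as a rough stand-in for token count, and `summarize` is a hypothetical, caller-supplied function that would wrap the actual GPT-3.5/GPT-4 call.

```python
from typing import Callable, List


def split_into_chunks(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words.

    Assumption: word count is only a rough proxy for the model's token count.
    """
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long_text(
    text: str,
    summarize: Callable[[str], str],
    max_words: int = 2000,
) -> str:
    """Map-reduce summarization: summarize each chunk, then summarize the summaries.

    `summarize` is a hypothetical placeholder for a function that sends one
    chunk to an LLM and returns its summary.
    """
    chunks = split_into_chunks(text, max_words)
    if len(chunks) <= 1:
        # The whole text fits into a single request.
        return summarize(text)
    # Map step: summarize every chunk independently.
    partial_summaries = [summarize(chunk) for chunk in chunks]
    combined = "\n".join(partial_summaries)
    # Guard against endless recursion if the summaries do not shrink the text.
    if len(combined.split()) >= len(text.split()):
        raise ValueError("chunk summaries are not shorter than the input")
    # Reduce step: the joined summaries may still be too long, so recurse.
    return summarize_long_text(combined, summarize, max_words)
```

For a quick dry run without an API key, pass a stand-in such as `lambda s: " ".join(s.split()[:50])`. Because each round works on a shorter text, the recursion ends once the joined summaries fit into a single chunk.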